Learn about AI

Learn about AI through a series of hands-on interactive tutorials and step-by-step-videos that detail general concepts for imaging AI

Check out the FDA-cleared AI models available for clinical use

Introductions

Overview

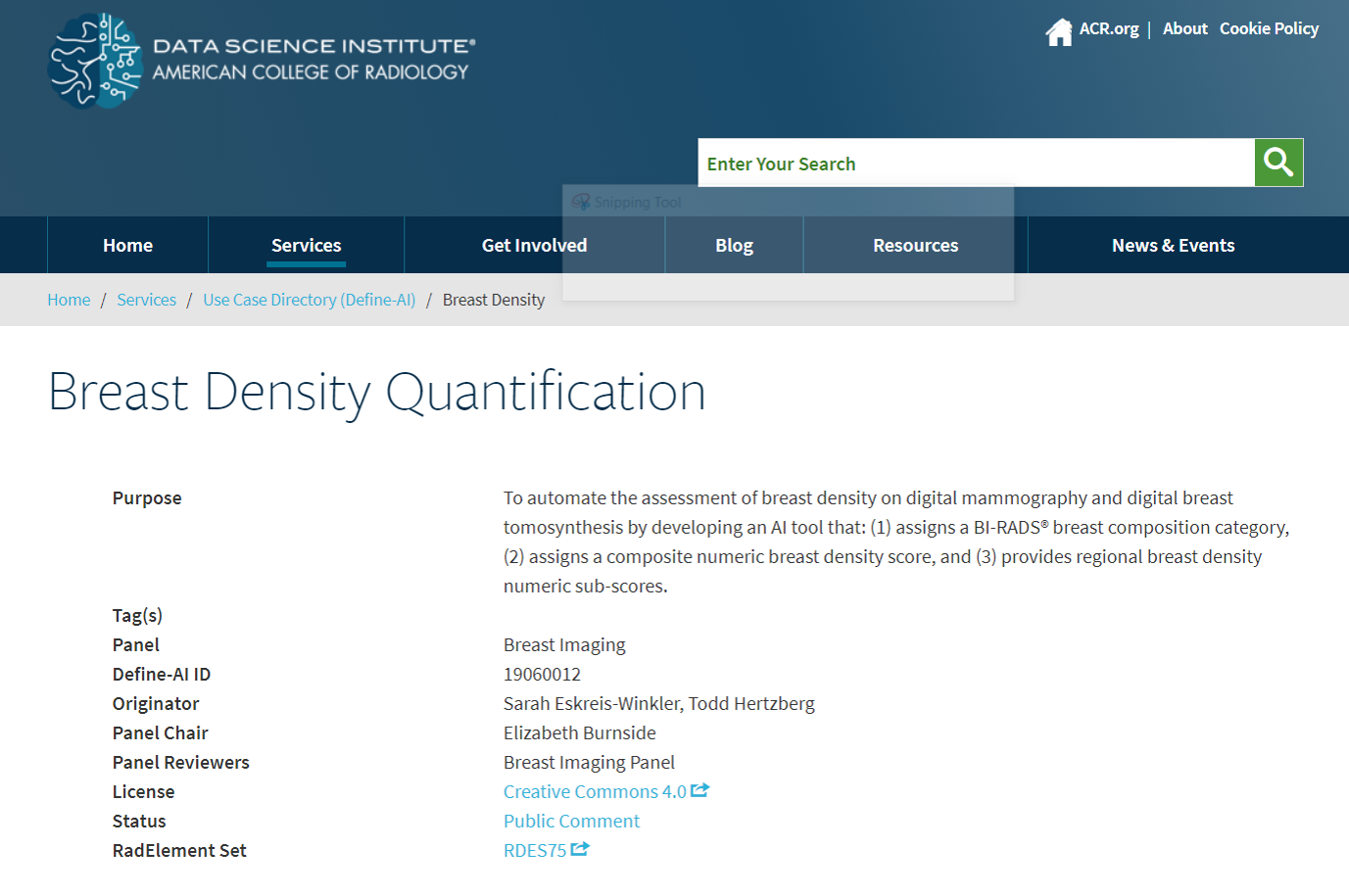

Definition of Use Case

Democratization of AI

Modules

How does a radiologist do AI?

What can go wrong?

Issues of PHI

How can data scientists help radiologists?

Data Accessibility

Discrimination and Calibration

Global Health and AI

ROC vs. PRC

Ophthalmologykeyboard_arrow_down

Autonomous AI and Health Equity

Definekeyboard_arrow_down

Define Tutorial

Instructions

- Browse defined AI use cases.

- Search, filter, or sort through all of the use cases.

- Click on the use case name under the USE CASE column to comment on a published use case.

- Click on REQUEST TO DRAFT A USE CASE under the REQUEST column to request to draft a use case that is not yet published.

- Click on SUBMIT A NEW USE CASE and fill out the form to submit a new AI use case for the ACR to review.

Annotatekeyboard_arrow_down

Annotate

Annotate Tutorial

How to annotate bounding boxes

Instructions

- Select a use case.

- Select a dataset to annotate.

- Use the image viewing tools to examine the scan.

- Classify each image by selecting one of the categories.

- Click NEXT to save your annotation and view the next scan.

- Click FINISH to finish annotating and return to the home page.

Createkeyboard_arrow_down

Architecture

Overfitting

Cost function

Epochs

Pre-processing

Confusion Matrix Details vs. Accuracy

Kappa

Create Tutorial: Part1

Create Tutorial: Part2

Equal Sampling

Instructions

-

Define the problem.

- Select a body area.

- Select a modality.

- Select an AI use case.

-

Prepare the data.

- Select the training dataset.

- Select the Augmentation.

- Click START PREPROCESSING to prepare the data.

-

Configure your model.

- Select the model architecture.

- Select the data sampling method.

- Select the model loss function.

- Select if the model is pre-trained or not.

- Select if the model has early stopping or not.

- Click TRAIN AND TEST to train and test your model.

- Click RUN PREDICTION to view the model’s prediction.

- Repeat the process to create another model. You can continue to add models to your screen.

- Click REMOVE to remove a model from your screen.

Terminology

Training Dataset Size

The size of the training dataset can affect the accuracy of the model. In general, the model will be more accurate as the training dataset size increases.

Augmentation

Apply additional transformations, such as rotations, flips, or stretching, to images input to the network.

- None: Do not transform images.

- Random Flips/Rotations: Add random flips and rotations to the scans before the model sees them.

Architectures

Structure of the neural network.

- ResNet: Type of architecture developed in 2015 for the ImageNet Large Scale Visual Recognition Challenge that introduced skipped connections between layers and won first place in image classification, detection, and localization.

- VGGNet: Type of architecture developed in 2014 for the ImageNet Large Scale Visual Recognition Challenge that won first place on image localization.

- InceptionNet: Type of architecture whose innovation was to attempt to process information at different spatial scales. It also used new methods to make neural networks "deeper" (more complex) while still learning broad, generalizable concepts about its chosen task.

- DenseNet: Type of architecture whose innovation in neural networks allowed them to maintain high levels of complexity and classification ability, while greatly reducing the computational time and memory required to classify a single case.

Sampling Methods

The ratio of each class that the model sees at each training step

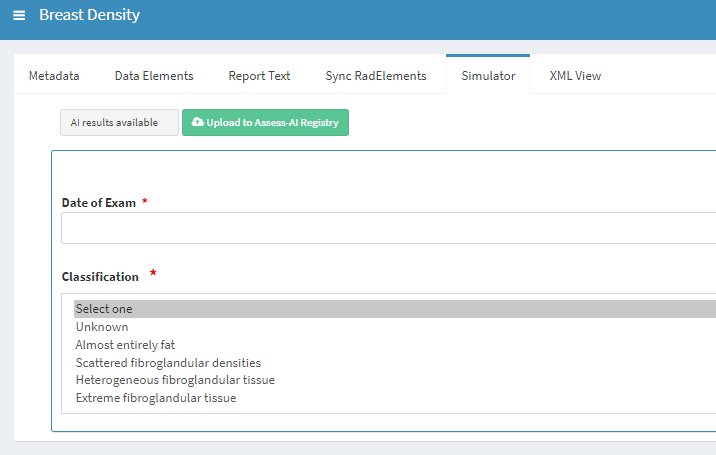

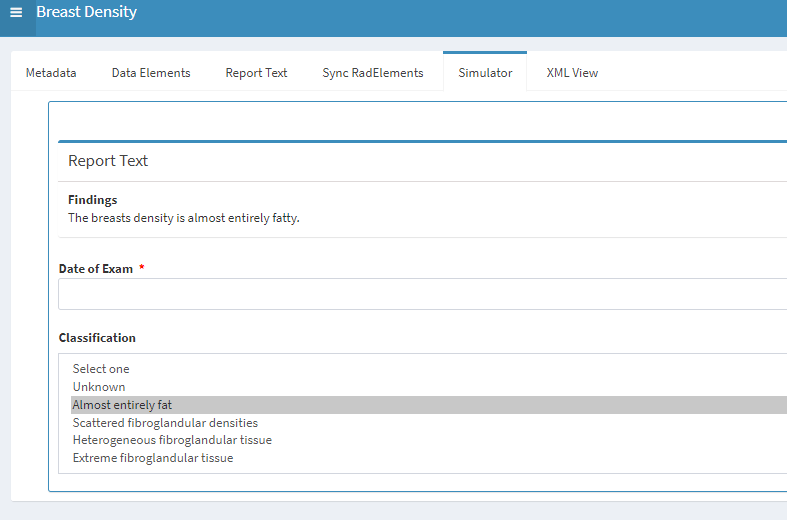

- Equal Class Ratios: Sample the studies so that each class is represented equally – 25% almost entirely fat, 25% scattered fibroglandular densities etc.

- Random Sampling: Sample the studies randomly, in the distribution they are found in the original dataset.

Loss Functions

How the model knows what to learn

-

Mean Absolute Error

- Treat all successes differently depending on confidence, treat all failures differently depending on confidence.

- If the model got the right category and was very confident in the choice, then it was a large success. If the model got the right category and was not confident in the choice, then it was a small success. If the model got the wrong category and was not confident in the choice, then it was a small failure. If the model got the wrong category and was very confident in the choice, then it was a large failure.

- Assume that the distance between the categories is the same.

-

Categorical Cross-Entropy

- Treat all successes differently depending on confidence, treat all failures equally.

- If the model got the right category and was very confident in the choice, then it was a large success. If the model got the right category and was not confident in the choice, then it was a small success. If the model got the wrong category, then it was a failure and all failures are treated the same.

-

Mean-Squared Error

- Treat all successes differently depending on confidence, treat all failures differently depending on confidence.

- If the model got the right category and was very confident in the choice, then it was a large success. If the model got the right category and was not confident in the choice, then it was a small success. If the model got the wrong category and was not confident in the choice, then it was a small failure. If the model got the wrong category and was very confident in the choice, then it was a large failure.

- Heavily penalize large failures and assume that the distance between the categories is the same.

Pretraining

Whether or not the model has been trained on a different task before training on a new task

- Random Initialization: Randomly start the model, it has not been pretrained on another task.

- Pretrained Weights (ImageNet): Start with a pretrained model that was previously trained to classify natural images, such as photos of cars, dogs, and buildings.

Early Stopping

If there is early stopping, the model will stop learning once its performance is no longer improving.

Kappa Score

Statistic that measures the agreement between two raters that classify items into mutually exclusive categories and takes into account the probability of random agreement. If two raters are in complete agreement, then the kappa score could be close to 1. If two raters are in complete disagreement, then the kappa score could be close to 0 or even negative if they are agreeing less than the probability of random agreement.

AUC Score

(Area under the curve of the Receiver operating characteristic) Performance measurement for classification problems that measures how well a model separates categories. An AUC score close to 1 means that the model is good at separating categories; it classifies 0s as 0s and 1s as 1s. An AUC score close to 0 means that the model is separating into opposite categories; it classifies 0s as 1s and 1s as 0s. An AUC score close to 0.5 means that the model is no better at separating categories than random chance.

Confusion Matrix

A table that is used to display the performance of a classification model on a test dataset as compared to the ground truth. Each square has a unique row and column combination. The square in row 1 and column 2 says that the ground truth classified those test data points as category 1 but the classification model classified those same test data points as category 2. All of the squares along the diagonal of the table display the number of test cases when the ground truth and classification model are in agreement. All squares off the diagonal represent any disagreement between the ground truth and classification model; the further away from the diagonal, the larger the disagreement. If there is complete agreement between the ground truth and classification model, then all of the off diagonal squares will be zero. If there is complete disagreement between the ground truth and classification model, then all of the diagonal squares will be zero.

Evaluatekeyboard_arrow_down

Transfer learning

Evaluate Tutorial

Instructions

- Select an AI use case.

- Select a validation dataset.

- Click on the models you want to evaluate, you may click on more than one.

- Click EVALUATE MODELS to view the test results.

- Click SHOW MODELS to change your selections.

Terminology

Kappa Score

Statistic that measures the agreement between two raters that classify items into mutually exclusive categories and takes into account the probability of random agreement. If two raters are in complete agreement, then the kappa score could be close to 1. If two raters are in complete disagreement, then the kappa score could be close to 0 or even negative if they are agreeing less than the probability of random agreement.

AUC Score

(Area under the curve of the Receiver operating characteristic) Performance measurement for classification problems that measures how well a model separates categories. An AUC score close to 1 means that the model is good at separating categories; it classifies 0s as 0s and 1s as 1s. An AUC score close to 0 means that the model is separating into opposite categories; it classifies 0s as 1s and 1s as 0s. An AUC score close to 0.5 means that the model is no better at separating categories than random chance.

Confusion Matrix

A table that is used to display the performance of a classification model on a test dataset as compared to the ground truth. Each square has a unique row and column combination. The square in row 1 and column 2 says that the ground truth classified those test data points as category 1 but the classification model classified those same test data points as category 2. All of the squares along the diagonal of the table display the number of test cases when the ground truth and classification model are in agreement. All squares off the diagonal represent any disagreement between the ground truth and classification model; the further away from the diagonal, the larger the disagreement. If there is complete agreement between the ground truth and classification model, then all of the off diagonal squares will be zero. If there is complete disagreement between the ground truth and classification model, then all of the diagonal squares will be zero.

Runkeyboard_arrow_down

Inference

Run Tutorial

Instructions

- Select an AI use case.

- Select a model to run.

- Click MORE DETAIL to view more information about the model.

- Select a prepopulated image.

- Click RUN PREDICTION to view the model’s output.

- Click RESET or select another prepopulated image to run another prediction.

Publishkeyboard_arrow_down

Publish tutorial

Instructions

- Select an AI use case.

- If there are no available models, go to the create page, create a model, and save it.

- Select a destination to publish your model to.

- Click PUBLISH. This will send your model to the destination.

Assesskeyboard_arrow_down

Assess tutorial

Instructions

- Select an AI use case.

- To view just one model at a time, select a model from the drop down list.

Terminology

Kappa Score

Statistic that measures the agreement between two raters that classify items into mutually exclusive categories and takes into account the probability of random agreement. If two raters are in complete agreement, then the kappa score could be close to 1. If two raters are in complete disagreement, then the kappa score could be close to 0 or even negative if they are agreeing less than the probability of random agreement.

Confusion Matrix

A table that is used to display the performance of a classification model on a test dataset as compared to the ground truth. Each square has a unique row and column combination. The square in row 1 and column 2 says that the ground truth classified those test data points as category 1 but the classification model classified those same test data points as category 2. All of the squares along the diagonal of the table display the number of test cases when the ground truth and classification model are in agreement. All squares off the diagonal represent any disagreement between the ground truth and classification model; the further away from the diagonal, the larger the disagreement. If there is complete agreement between the ground truth and classification model, then all of the off diagonal squares will be zero. If there is complete disagreement between the ground truth and classification model, then all of the diagonal squares will be zero.

Bonuskeyboard_arrow_down

Saliency maps

Confusion Matrix Tutorial

AI in Pediatric Radiology